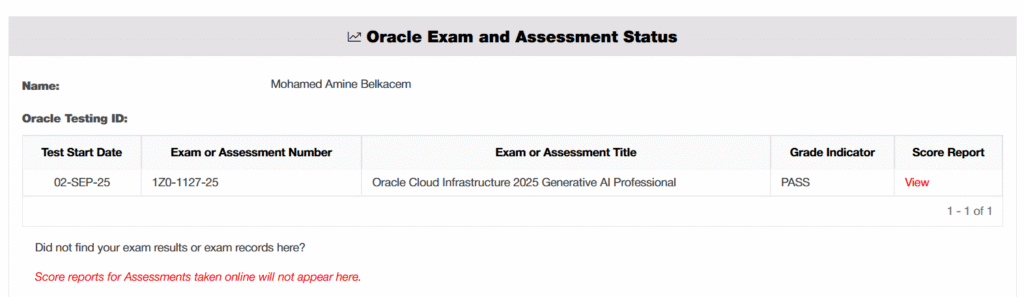

Recently, on 02 September 2025, I took the Oracle Cloud Infrastructure Generative AI Professional certification exam (1Z0-1127-25), and I passed. As a mechatronics student who’s focused on robotics and AI, I wanted to see if this certification was worth the effort.

Here’s the story of how I prepared, what the exam was like, and why it matters for engineers like me.

Why I Decided to Take the Exam

ChatGPT alone receives over 5.24 billion visits each month, and that’s before counting other tools like Claude, Gemini, and Perplexity. It’s safe to say that AI tools, and LLMs (Large Language Models) in particular, are taking their place in our lives.

Companies are already integrating AI agents into workflows to improve customer support, while countless SaaS products now rely on specialized AI solutions.

I’m deeply involved in robotics, and one of the reasons I chose it as a major and career path is how tightly it integrates with software, especially AI. Many of our projects require model training, and I’ve learned that training from scratch can be very expensive. Instead, we often start with pre-built models and adapt them using techniques like fine-tuning and retrieval-augmented generation (RAG).

Taking the Oracle Generative AI Professional 2025 exam was exciting and promising for me because it reflects how important it is to adapt in an industry that is constantly reshaping itself, and how crucial it is to stay flexible as new tools, frameworks, and methods emerge.

Inside My Exam Experience

The exam consists of 50 questions, with a 90-minute time limit and a passing score of 68%. This is slightly tougher than the 2024 version (1Z0-1127-24), which had 40 questions and a 65% passing score.

I scheduled my exam one day in advance. When the time came, I checked in 30 minutes early. Oracle redirected me to Proctorio, where I verified my identity using my webcam and ID card. If you’re planning to take the exam, make sure your computer has admin access, a working webcam, and a valid form of identification (ID card, passport, or driver’s license).

Once verified, I was redirected to the exam itself. The questions were noticeably harder than the practice tests and skill checks. In my case, only three questions were repeated from them. The rest were new, and some were very specific, the kind of detail you’d only know if you had practiced in the labs. For example, one question asked about the keys of the JSONL dataset used to fine-tune models (the correct answer is “prompt” and “completion”).
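To make that JSONL detail concrete, here’s a minimal sketch of what such a dataset could look like. Only the two keys, “prompt” and “completion”, come from the exam question; the example texts and the `to_jsonl` helper are made up for illustration, and the real service may impose extra requirements (file size, minimum number of rows) that I’m not covering here.

```python
import json

# Hypothetical training examples; the texts are invented for illustration.
examples = [
    {"prompt": "Summarize: The robot arm missed its target twice.",
     "completion": "The arm missed its target two times."},
    {"prompt": "Translate to French: Good morning",
     "completion": "Bonjour"},
]

def to_jsonl(records):
    """Serialize records as JSONL: one JSON object per line."""
    lines = []
    for record in records:
        # Each line must carry exactly the two expected keys.
        if set(record) != {"prompt", "completion"}:
            raise ValueError(f"unexpected keys: {sorted(record)}")
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n"

jsonl_text = to_jsonl(examples)
print(jsonl_text, end="")
```

Each line is a standalone JSON object, which is what separates JSONL from a regular JSON array.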

Another thing I noticed is that many questions give you two obviously wrong options and two that are so close they both seem correct.

My key advice here, aside from carefully analyzing each choice, is to always check the context first. Let me give you my case as an example.

I faced a question asking about the key advantages of RAG compared to fine-tuning and prompt engineering. Two of the answers made sense, but one of them described RAG combined with fine-tuning, not RAG compared to fine-tuning and prompt engineering. Since the question was about RAG versus both, I went with the other option, the one that was both a correct statement and a match for the context of the question.

Course Content at a Glance

The Generative AI Professional (1Z0-1127-25) course and exam are built around the main concepts that engineers need when working with large language models and their applications. While the content focuses on OCI Generative AI, most of the material reflects general AI practices that can be applied in other environments.

Here’s what you’ll learn:

- Fundamentals of LLMs: Explore transformer architectures, prompt design, and methods to fine-tune models effectively.

- OCI Generative AI Services: Learn to use prebuilt chat and embedding models, spin up AI clusters for inference and fine-tuning, and deploy model endpoints.

- Implementing RAG Workflows: Understand how to integrate Oracle Database 23ai with LangChain, embed and index document chunks, perform similarity searches, and generate AI responses.

- Generative AI Agents: Build knowledge bases, deploy RAG agents, and leverage chat interfaces to create AI agents that can interact with users.

Compared to the AI Foundations Associate, this Professional-level exam goes much deeper, shifting from surface concepts to hands-on design and deployment scenarios.
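The RAG workflow from the list above (chunk, embed, index, similarity search, generate) can be sketched in plain Python. This is a toy version under loud assumptions: the word-count “embedding” stands in for a real embedding model, the in-memory list stands in for a vector store such as Oracle Database 23ai, and the final step only builds the prompt instead of calling an LLM. None of the function names come from OCI or LangChain.

```python
import math
from collections import Counter

def chunk_text(text, max_words=12):
    """Split text into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words])
            for i in range(0, len(words), max_words)]

def embed(text):
    """Toy embedding: lowercase word counts (NOT a real embedding model)."""
    return Counter(word.strip(".,?!").lower() for word in text.split())

def dot(a, b):
    """Dot product of two sparse vectors; sensitive to magnitude."""
    return sum(a[w] * b[w] for w in a if w in b)

def cosine(a, b):
    """Cosine similarity: dot product normalized by both vector lengths."""
    norm = math.sqrt(dot(a, a)) * math.sqrt(dot(b, b))
    return dot(a, b) / norm if norm else 0.0

def retrieve(query, index, top_k=1):
    """Return the top_k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:top_k]]

def build_prompt(query, context_chunks):
    """Assemble the grounded prompt that would be sent to an LLM."""
    context = "\n".join(context_chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

# Ingestion: chunk and embed the documents, then keep them in an in-memory index.
documents = [
    "A dedicated AI cluster hosts fine-tuning and inference workloads.",
    "Cosine similarity compares the direction of two embedding vectors.",
]
index = [(chunk, embed(chunk)) for doc in documents for chunk in chunk_text(doc)]

# Retrieval and generation (prompt assembly only in this sketch).
query = "What does cosine similarity compare?"
top_chunks = retrieve(query, index)
prompt = build_prompt(query, top_chunks)
print(prompt)
```

In a real setup, `embed` would call an embedding model and `index` would live in a vector database, but the three stages stay the same.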

Essential Technical Knowledge

While following the course, you’ll come across a large amount of information and many technical terms. Most of these are explained more than once during the lessons, so it’s useful to take notes as you go. It also helps if you can read Python code, since many examples in the videos and labs rely on it.

Nonetheless, the table below summarizes the key technical terms you’ll encounter and can serve as a handy study reference.

| Term | Explanation |
|---|---|
| Groundedness | The model’s output aligns with factual, reliable information and avoids hallucination (made-up or incorrect details). |
| Hallucination | When an AI confidently generates incorrect or fabricated facts. The counterpart to groundedness. |
| Large Language Models (LLMs) | Transformer-based models (like GPT) that handle text generation, understanding, summarization, etc. |
| Encoders / Decoders | Encoders convert input into vector representations. Decoders generate text token by token. |
| Prompt Engineering | Crafting inputs (prompts) to steer model outputs. Includes strategies like k-shot, chain-of-thought, least-to-most, and step-back prompting. |
| Bad Prompting (Prompt Injection) | Prompts that attempt to bypass model guardrails or safety filters. A risk to be aware of and defend against. |
| Fine-Tuning | Adapting a pre-trained model by adjusting all its parameters on task-specific data. |
| PEFT (Parameter-Efficient Fine-Tuning) | Fine-tuning that updates only a small subset of parameters, for efficiency. |
| LoRA (Low-Rank Adaptation) | A PEFT method that trains small low-rank adapter matrices instead of modifying the full model. |
| T-Few | Another PEFT method that selectively updates parts of a model for task adaptation. |
| Retrieval-Augmented Generation (RAG) | Combines LLMs with external data retrieval for grounded outputs. |
| RAG Token vs RAG Sequence | RAG Token: can draw on a different retrieved document for each generated token. RAG Sequence: uses the same retrieved document for the whole output. |
| RAG Pipeline | Ingestion (load, chunk, embed, and index documents) → Retrieval (find the chunks most similar to the query) → Generation (answer using the retrieved context). |
| Vector Embedding & Semantic Search | Converts text to vectors and retrieves contextually similar content using embeddings. |
| Dot Product vs Cosine Similarity | Dot product includes magnitude; cosine looks only at direction. Both are used for vector comparisons. |
| Embedding | Numeric representation of a text or item in vector form. |
| LangChain | A Python framework for chaining LLM flows: memory, prompts, data retrieval, tracing. |
| LangChain Memory Types | Examples: ConversationBufferMemory, ConversationTokenBufferMemory, ConversationSummaryMemory. They help maintain conversation context. |
| Dedicated AI Cluster | Isolated GPU cluster in OCI for fine-tuning or inference. |
| Endpoint | A stable model service endpoint where your fine-tuned model is deployed. |
| Knowledge Base | Structured external data (e.g. FAQ, docs) used in combination with RAG for richer responses. |
| Hosting | Serving infrastructure for deployed models on OCI. |
| Chat Models | LLMs tuned for conversational behavior (chatbots, like ChatGPT). |
| Diffusion Models | Generative models better suited for image creation from text prompts. |
| Generative AI Agents | AI systems that autonomously perform tasks or interact using LLM logic. |
| OCI Security Measures | OCI-specific protections: encrypted storage, isolated AI compute clusters, IAM. |
| Temperature | Adjusts randomness in output: higher = more creative; lower = more predictable. |
| Top-p (Nucleus Sampling) | Selects the next token from the smallest group whose combined probability meets the threshold p. For example, if p = 0.9, it samples from the most probable tokens whose probabilities sum to at least 90%. |
| Top-k Sampling | Picks the next token from the k most probable tokens. |
| Seed | Initial value for randomness; identical seed + same input → same output. Useful for reproducibility. |
| Preamble | Introductory text before the primary prompt, used to set model context or instructions. |
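The sampling rows at the end of the table (temperature, top-k, top-p, seed) are easier to remember once you’ve seen them in code. Here’s a small sketch over a made-up four-token vocabulary. The logits and function names are my own assumptions, and this shows the general idea only, not how OCI Generative AI implements sampling internally.

```python
import math
import random

def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = [score / temperature for score in logits.values()]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return {token: e / total for token, e in zip(logits, exps)}

def top_k_filter(probs, k):
    """Keep the k most probable tokens and renormalize."""
    kept = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:k]
    total = sum(p for _, p in kept)
    return {token: p / total for token, p in kept}

def top_p_filter(probs, p):
    """Keep the smallest set of tokens whose cumulative probability reaches p."""
    kept, cumulative = [], 0.0
    for token, prob in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept.append((token, prob))
        cumulative += prob
        if cumulative >= p:
            break
    total = sum(prob for _, prob in kept)
    return {token: prob / total for token, prob in kept}

def sample(probs, seed):
    """Draw one token; the same seed and the same probs give the same token."""
    rng = random.Random(seed)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]

# Hypothetical next-token scores, invented for this example.
logits = {"robot": 2.0, "arm": 1.0, "sensor": 0.5, "banana": -1.0}

probs = softmax_with_temperature(logits, temperature=1.0)
sharp = softmax_with_temperature(logits, temperature=0.5)   # more predictable
flat = softmax_with_temperature(logits, temperature=2.0)    # more random

top2 = top_k_filter(probs, k=2)
nucleus = top_p_filter(probs, p=0.9)

print("top-k tokens:", list(top2))
print("top-p tokens:", list(nucleus))
print("sampled:", sample(nucleus, seed=42))
```

Note that top-k always keeps a fixed number of tokens, while top-p keeps however many it takes to reach the probability threshold.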

Additionally, I came across this GitHub repository with detailed notes on every module of the course. Shoutout to Leon Silva.

My Overall Experience

I think this certification truly deserves the time and effort. I spent an entire week preparing for it, though I already had some prior knowledge before starting the course.

Normally the exam costs $250, but since I joined during Oracle’s Race to Certification period, I received two free vouchers. I used one for this exam and still have another available until October 31, 2025.

I was beyond happy when I saw that I had passed, and even more excited knowing I can now apply what I learned, both in my robotics projects and in conversations about AI.

So if you’re looking for me, I’ll probably be staring at HuggingFace from now on with a Jetson Nano in my hands.
